machine learning - What are the advantages of ReLU over sigmoid function in deep neural networks? - Cross Validated

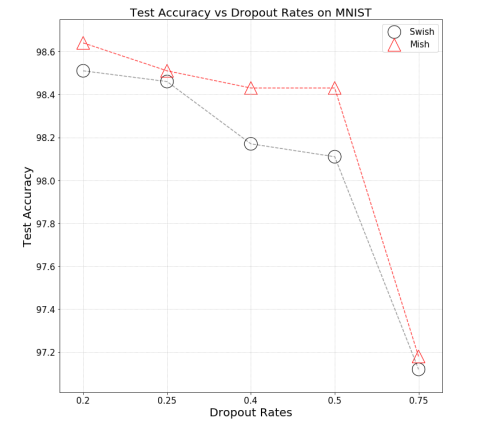

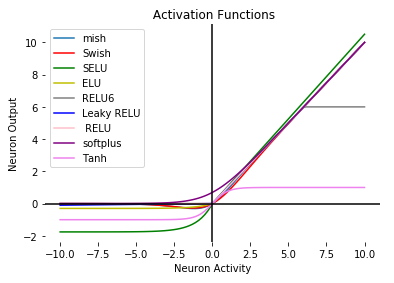

Swish Vs Mish: Latest Activation Functions – Krutika Bapat – Engineering at IIIT-Naya Raipur | 2016-2020

8: Illustration of output of ELU vs ReLU vs Leaky ReLU function with... | Download Scientific Diagram

Why Relu? Tips for using Relu. Comparison between Relu, Leaky Relu, and Relu-6. | by Chinesh Doshi | Medium

![Different Activation Functions. a ReLU and Leaky ReLU [37], b Sigmoid...](https://www.researchgate.net/publication/339905203/figure/fig3/AS:868603377225728@1584102591508/Different-Activation-Functions-a-ReLU-and-Leaky-ReLU-37-b-Sigmoid-Activation-Function.png)

Different Activation Functions. a ReLU and Leaky ReLU [37], b Sigmoid... | Download Scientific Diagram

Advantages of ReLU vs Tanh vs Sigmoid activation function in deep neural networks. - Knowledge Transfer

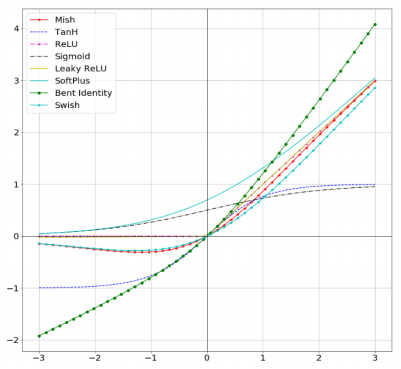

How to Choose the Right Activation Function for Neural Networks | by Rukshan Pramoditha | Towards Data Science

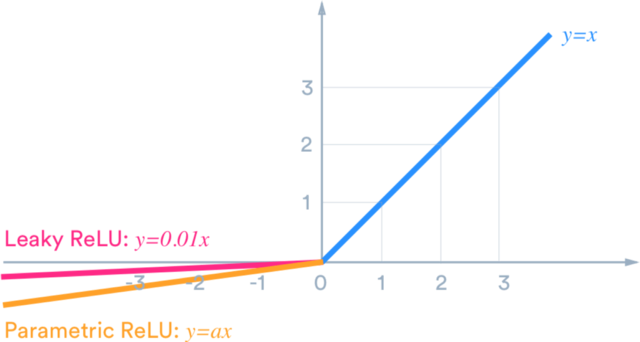

Activation Functions : Sigmoid, tanh, ReLU, Leaky ReLU, PReLU, ELU, Threshold ReLU and Softmax basics for Neural Networks and Deep Learning | by Himanshu S | Medium

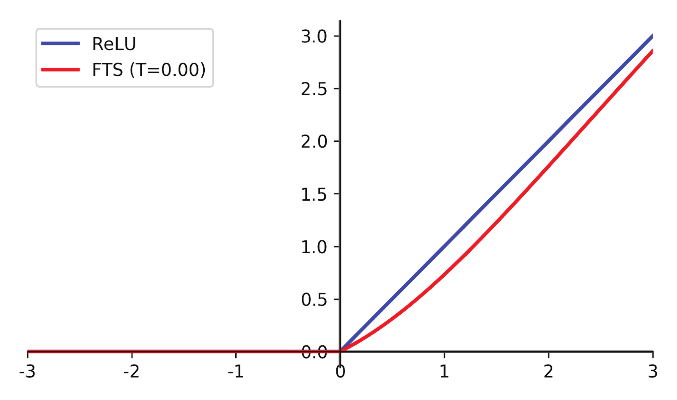

Flatten-T Swish: A Thresholded ReLU-Swish-like Activation Function for Deep Learning | by Joshua Chieng | Medium

What makes ReLU so much better than Linear Activation? As half of them are exactly the same. - Quora

Different Activation Functions for Deep Neural Networks You Should Know | by Renu Khandelwal | Geek Culture | Medium
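
The pages collected above compare several activation functions. The following Python is a minimal sketch, not taken from any of them, that defines the functions they name (sigmoid, tanh, ReLU, Leaky ReLU, ReLU-6, ELU, Swish, Mish). It also illustrates one commonly cited reason for preferring ReLU over sigmoid in deep networks. The sigmoid's derivative never exceeds 0.25, so a product of such factors across many layers shrinks geometrically. ReLU's derivative, by contrast, is exactly 1 for positive inputs. The `chained_grad` helper is a hypothetical simplification: it ignores weights and assumes every layer sees the same pre-activation.

```python
import math


def sigmoid(x: float) -> float:
    # Numerically stable form: avoids overflow in math.exp for large |x|
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)  # maximum value 0.25, reached at x = 0


def tanh_grad(x: float) -> float:
    return 1.0 - math.tanh(x) ** 2  # maximum value 1.0, reached at x = 0


def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float) -> float:
    # Subgradient convention chosen here: 0 at x == 0
    return 1.0 if x > 0 else 0.0


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    # Small slope alpha for negative inputs instead of a hard zero
    return x if x > 0 else alpha * x


def relu6(x: float) -> float:
    # ReLU clipped at 6
    return min(max(0.0, x), 6.0)


def elu(x: float, alpha: float = 1.0) -> float:
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def softplus(x: float) -> float:
    # Stable log(1 + e^x)
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def swish(x: float) -> float:
    return x * sigmoid(x)


def mish(x: float) -> float:
    return x * math.tanh(softplus(x))


def chained_grad(grad_fn, x: float, depth: int) -> float:
    """Hypothetical helper: product of the activation's local derivative
    over `depth` layers, assuming every layer sees the same input x and
    all weights equal 1 (a deliberate simplification)."""
    result = 1.0
    for _ in range(depth):
        result *= grad_fn(x)
    return result


if __name__ == "__main__":
    for depth in (1, 5, 10):
        print(
            f"depth={depth:2d}  "
            f"sigmoid chain={chained_grad(sigmoid_grad, 0.0, depth):.3e}  "
            f"relu chain={chained_grad(relu_grad, 1.0, depth):.1f}"
        )
```

Even at the sigmoid's best case (x = 0), ten stacked layers scale the gradient by 0.25^10, roughly 1e-6. ReLU passes it through unchanged for active units, at the cost of zero gradient for negative inputs, which is the issue Leaky ReLU and ELU address.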